Agentic AI is already reshaping clinical care, hospital operations, and patient engagement. Yet misconceptions—ranging from clinician replacement fears to safety concerns—often delay adoption.

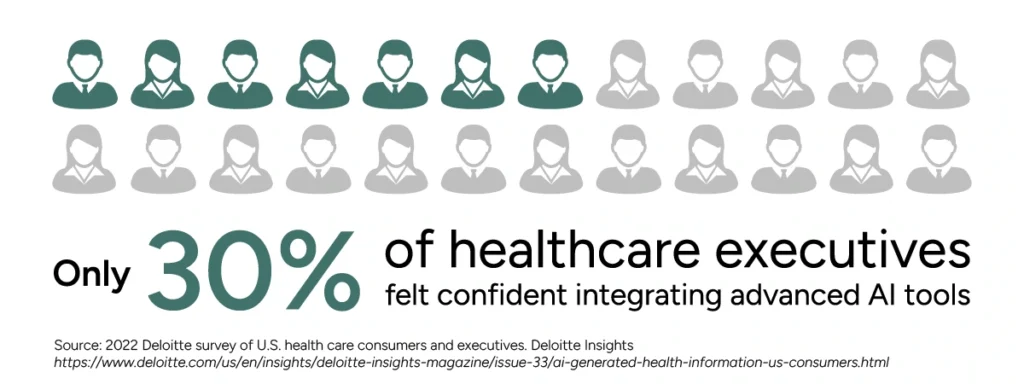

A Deloitte survey found that only 30% of healthcare executives felt confident integrating advanced AI tools, citing culture, regulation, and workflow disruption as barriers.¹ Physicians also worry AI could undermine autonomy and humanism, despite evidence that AI reduces errors and administrative burden.

In reality, agentic AI is proving its value today. Emerging evidence shows AI can reduce diagnostic errors, streamline administrative tasks, and improve clinician well-being,²,³ suggesting that, with thoughtful design and oversight, AI can support humanistic, trusted care delivery.

By separating myths from realities, healthcare leaders can make informed, strategic decisions about when and how to deploy agentic AI designed for improved care.

Myth #1: Agentic AI Will Replace Clinicians

Automation anxiety, media hype about “doctor-beating” algorithms, and worsening physician shortages (projected at 87,000 in the U.S. by 2037)⁴ fuel fears that AI could substitute for human clinicians. Many worry that health systems under pressure may deploy AI to replace rather than support physicians.

Reality: Agentic AI strengthens clinical decision support and reduces physician burnout.

Burnout—driven largely by documentation burden and inefficient EHR workflows—is a leading contributor to early retirements and staffing shortages. Agentic AI addresses these challenges by automating repetitive, low-value tasks, allowing physicians to reclaim time for direct patient care.

Studies show that agentic AI can automate documentation and administrative tasks, enabling physicians to spend more time with patients and less on clerical work. It can also manage functions such as medication outreach, scheduling, and patient education—helping to mitigate the primary care access crisis, where more than 100 million Americans lack consistent access.5

By suggesting best next actions for care in real time, agentic AI improves clinical accuracy, reduces workload, and preserves human judgment and empathy at the heart of medicine. Leaders who integrate AI into workflows—not simply bolt it on—can maximize its impact, reclaiming valuable physician time.

Ultimately, agentic AI augments clinical expertise; it does not replace it. Instead, it strengthens physicians’ capacity while restoring focus on meaningful patient care.

Myth #2: Patient Trust and Experience Will Be Undermined by AI

Patients may feel uneasy about AI involvement in their care, fearing depersonalization or diminished empathy. Historical skepticism toward machine-mediated decisions and recent studies showing concerns about physician credibility when AI is disclosed reinforce this perception.6, 7

Reality: Thoughtfully deployed agentic AI can enhance patient trust and experience.

When integrated into workflows with transparency and clinician oversight, agentic AI improves satisfaction, strengthens communication, and personalizes care delivery. McKinsey reports that AI-powered personalization and proactive outreach are enabling healthcare organizations to design more tailored, engaging care journeys.8

Additionally, ambient documentation tools have been shown to improve the quality of provider-patient interactions by reducing the clerical burden and allowing clinicians to focus on patients.9

Myth #3: Agentic AI Is Just a Smarter Chatbot

A common misconception is that agentic AI is simply a more advanced chatbot. This belief stems from the prevalence of consumer-facing AI tools that provide static, scripted answers.

Reality: It’s not “chat”—it’s care orchestration.

Agentic AI can reason, plan, and act across care pathways. Unlike chatbots, which are limited to conversational responses, agentic AI coordinates care, monitors patients, and initiates actions when risks escalate.

For example, solutions can integrate directly with electronic health records (EHRs), clinical knowledge resources, and patient communication channels. This integration enables functions such as post-discharge outreach, care gap closure, and appointment preparation—extending patient engagement far beyond a chat interface.5

Research indicates that implementing artificial intelligence to identify patients at highest risk for readmission can help reduce quality gaps when combined with patient-centered interventions.10

Myth #4: It’s Too Risky to Deploy in Clinical Care

Concerns about safety and liability often prevent adoption of agentic AI. Healthcare leaders worry that autonomous AI could introduce new risks, increase malpractice exposure, or make errors without accountability. These fears are amplified by the novelty of AI regulation and uncertainty around how oversight should be implemented.

Reality: With proper oversight frameworks, agentic AI reduces—not increases—risk.

Agentic AI platforms designed with embedded safety guardrails, such as escalating cases only when risk thresholds are met or requiring clinician validation for high-stakes decisions, demonstrate that autonomy can coexist with strong governance.
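
The two guardrails named above can be pictured as a small routing rule. The following is a minimal sketch, not any vendor's implementation: the `ProposedAction` fields, the `route_action` function, and the 0.7 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "act automatically"
    ESCALATE = "escalate to care team"
    CLINICIAN_REVIEW = "hold for clinician validation"


@dataclass
class ProposedAction:
    description: str
    risk_score: float  # hypothetical model output in [0, 1]
    high_stakes: bool  # hypothetical flag, e.g. a medication change


def route_action(action: ProposedAction, escalation_threshold: float = 0.7) -> Route:
    """Decide how a proposed action is handled under two simple guardrails."""
    if not 0.0 <= action.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    # Guardrail 1: high-stakes decisions always require clinician sign-off.
    if action.high_stakes:
        return Route.CLINICIAN_REVIEW
    # Guardrail 2: escalate only when the risk threshold is met.
    if action.risk_score >= escalation_threshold:
        return Route.ESCALATE
    # Low-risk, routine actions proceed without interrupting clinicians.
    return Route.AUTO
```

In this sketch the high-stakes check runs first, so a low risk score can never bypass clinician validation. Where the threshold sits, and what counts as high-stakes, would be governance decisions for each organization.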

This approach not only mitigates risk but also strengthens accountability, ensuring AI supports clinicians rather than replacing judgment.

In reported results, AI-assisted recommendations that were still validated by physicians improved diagnostic precision compared with clinicians working alone, suggesting that oversight plus AI enhances safety rather than undermining it.11

Myth #5: Adoption Is Just a Technology Challenge

Many healthcare leaders assume that adopting agentic AI is primarily a matter of installing the right software or integrating it into existing IT systems. This belief reflects the history of digital health rollouts, where challenges often centered on infrastructure, interoperability, and compliance.

Reality: Culture and trust matter more than code.

The true barrier to adoption isn’t technical; it’s cultural. Clinician trust, leadership sponsorship, and workflow alignment are far more critical than technical integration alone. Without transparency, training, and clear governance, clinicians may resist AI tools, even when those tools are technically sound.

Successful implementations therefore hinge on leaders, particularly CMIOs and CIOs, guiding structured change management processes, championing transparency, and building clinician confidence. In other words, adoption depends as much on organizational culture and leadership as on the underlying algorithms.

Myth #6: Agentic AI Models Require Constant Manual Retraining

A common belief is that AI systems inevitably degrade over time, requiring costly and frequent retraining. This perception comes from traditional machine learning models, which are static and must be periodically updated when data distributions shift—a process that is both labor-intensive and resource-heavy.

Reality: Unlike static models, agentic AI adapts continuously.

Agentic AI differs fundamentally from legacy models because it can learn and adjust dynamically through live clinician feedback and incoming patient data. Instead of requiring frequent “from-scratch” retraining cycles, agentic AI continuously refines its reasoning and recommendations in real-world settings.
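
One simple way to picture feedback-driven adjustment without a full retraining cycle is a running estimate that shifts a little with each clinician response. The sketch below is an illustration under stated assumptions, not a description of how any particular product works: the `FeedbackTracker` class, its `learning_rate`, and the starting `prior` are hypothetical.

```python
from collections import defaultdict


class FeedbackTracker:
    """Keeps a running clinician acceptance rate per recommendation type."""

    def __init__(self, learning_rate: float = 0.1, prior: float = 0.5):
        if not 0.0 < learning_rate <= 1.0:
            raise ValueError("learning_rate must be in (0, 1]")
        self.learning_rate = learning_rate
        # Every recommendation type starts at the same neutral prior.
        self.acceptance = defaultdict(lambda: prior)

    def record(self, recommendation_type: str, accepted: bool) -> float:
        """Nudge the estimate toward 1.0 if accepted, toward 0.0 if rejected."""
        current = self.acceptance[recommendation_type]
        target = 1.0 if accepted else 0.0
        # Exponential moving average: recent feedback counts for more.
        updated = current + self.learning_rate * (target - current)
        self.acceptance[recommendation_type] = updated
        return updated
```

Each call to `record` updates a single number, so the estimate follows recent clinician behavior without reprocessing historical data. A production system would likely combine signals like this with periodic validation rather than rely on them alone.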

This adaptability allows it to maintain and even improve accuracy over time, while reducing operational costs and downtime associated with retraining.

For example, solutions like Tom™ by Lumeris leverage years of clinical data and ongoing patient interactions to continuously adapt, helping clinicians address evolving care needs without constant manual retraining.5 This ensures that AI remains accurate, efficient, and clinically relevant in dynamic care environments.

Conclusion: Why Debunking These Myths Matters

The greatest leadership risk isn’t adopting agentic AI; it’s failing to separate myths from realities.

By dispelling misconceptions, healthcare leaders can unlock real benefits: improved diagnostic precision, reduced clinician burnout, proactive care beyond hospital walls, stronger governance and safety, seamless interoperability, and more trusted patient experiences. In short, debunking myths empowers leaders to deploy AI that strengthens care, efficiency, and trust today.

1. Deloitte. (2022). 2022 Deloitte survey of U.S. health care consumers and executives. Deloitte Insights. https://www.deloitte.com/us/en/insights/deloitte-insights-magazine/issue-33/ai-generated-health-information-us-consumers.html

2. athenahealth. (2025). AI in healthcare: Emerging evidence and outcomes. athenahealth. https://www.athenahealth.com/resources/blog/use-ai-to-drive-efficiency

3. HealthLink Dimensions. (2025). AI adoption in healthcare: Trends and benefits. HealthLink Dimensions. https://healthlinkdimensions.com/physicians-on-ai

4. Petterson, S. M., et al. (2021). Projecting US primary care physician workforce needs. The Journal of the American Board of Family Medicine, 34(S1), S33–S39. https://doi.org/10.3122/jabfm.2021.S1.200536

5. Lumeris. (2025). Tom™: An agentic AI for healthcare. Lumeris.

6. Longoni, C., Bonezzi, A., & Morewedge, C. K. (2019). Resistance to medical artificial intelligence. Journal of Consumer Research, 46(4), 629–650. https://doi.org/10.1093/jcr/ucz013

7. JAMA Network Open. (2025). Patient perceptions of physician credibility and AI disclosure in healthcare. JAMA Network Open.

8. McKinsey & Company. (2024). The state of AI in healthcare 2024. McKinsey & Company. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-2024

9. Dallas Examiner. (2025). AI tools help doctors spend more time with patients. Dallas Examiner. https://dallasexaminer.com/parkland-health-centers-ai/

10. Romero-Brufau, S., Wyatt, K. D., Boyum, P., Mickelson, M., Moore, M., & Cognetta-Rieke, C. (2020). Implementation of artificial intelligence–based clinical decision support to reduce hospital readmissions at a regional hospital. BMJ Health & Care Informatics, 27(1), e100164. https://pmc.ncbi.nlm.nih.gov/articles/PMC7467834/

11. Business Insider. (2025). AI-assisted diagnosis improves precision in clinical practice. Business Insider.